A new camera system can reconstruct room-size scenes and moving objects that are hidden around a corner, researchers report.

The technology could one day help autonomous cars and robots operate even more safely than they would with human guidance.

David Lindell, a graduate student in electrical engineering at Stanford University, donned a high-visibility tracksuit and got to work, stretching, pacing, and hopping across an empty room. Through a camera aimed away from Lindell, at what appeared to be a blank wall, his colleagues could watch his every move.

That’s because a high-powered laser was scanning him, and the camera’s advanced sensors and processing algorithm captured and reconstructed the single particles of light he reflected onto the walls around him.

“People talk about building a camera that can see as well as humans for applications such as autonomous cars and robots, but we want to build systems that go well beyond that,” says Gordon Wetzstein, an assistant professor of electrical engineering. “We want to see things in 3D, around corners, and beyond the visible light spectrum.”

The camera builds on previous around-the-corner cameras from the team. It’s able to capture more light from a greater variety of surfaces, see wider and farther away, and is fast enough to monitor out-of-sight movement—such as Lindell’s calisthenics. Someday, the researchers hope superhuman vision systems could help autonomous cars and robots operate even more safely than they would with human guidance.

Keep it simple

Keeping their system practical is a high priority for the researchers. The hardware they chose, the scanning and image processing speeds, and the style of imaging are already common in autonomous car vision systems.

Previous systems for viewing scenes outside a camera’s line of sight relied on objects that reflect light either evenly or strongly. But many real-world objects, including shiny cars, fall outside these categories, so the new system can handle light bouncing off a range of surfaces, including disco balls, books, and intricately textured statues.

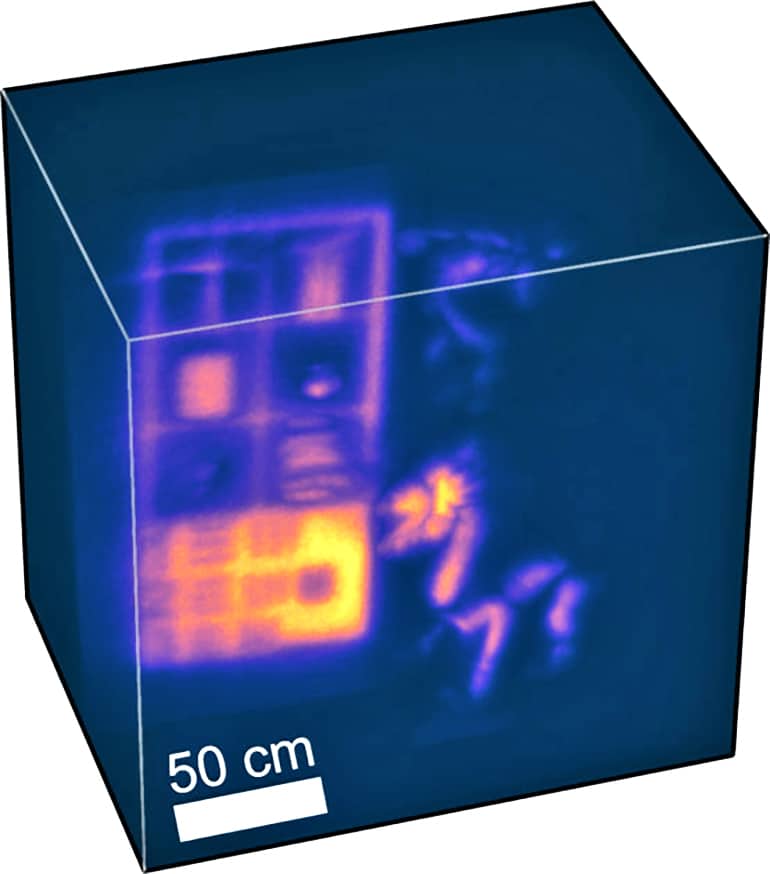

Central to the advance was a laser 10,000 times more powerful than the one the researchers used a year ago. The laser scans a wall opposite the scene of interest. That light bounces off the wall, hits the objects in the scene, bounces back to the wall, and then travels to the camera sensors.
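Because the camera times each returning photon, an arrival time can be turned into a distance. The minimal sketch below shows that arithmetic under stated assumptions: a "confocal" arrangement in which the laser spot and the spot the sensor watches are the same point on the wall, and a laser-to-wall plus wall-to-sensor path length that is already known. The function name `hidden_distance` and the example numbers are hypothetical, not taken from the researchers' system.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def hidden_distance(arrival_time_s: float, known_path_m: float) -> float:
    """Estimate how far a hidden reflector is from the scanned wall point.

    Assumption (hypothetical set-up): the laser spot and the spot the sensor
    watches coincide on the wall, so the unknown part of the journey is
    wall -> object -> wall, which is twice the wall-to-object distance.
    known_path_m (laser -> wall plus wall -> sensor) is assumed to be measured.
    """
    total_path_m = SPEED_OF_LIGHT * arrival_time_s
    unknown_path_m = total_path_m - known_path_m
    if unknown_path_m < 0:
        raise ValueError("arrival time is shorter than the known path allows")
    return unknown_path_m / 2.0


# Hypothetical numbers: 4 m of known path plus an object 1.5 m from the wall
# gives a 7 m total path, i.e. an arrival time of roughly 23 nanoseconds.
t = 7.0 / SPEED_OF_LIGHT
print(f"{t * 1e9:.1f} ns -> {hidden_distance(t, 4.0):.2f} m")
```

The nanosecond scale is the point of the example: resolving centimetres in the hidden scene means timing individual photons to within tens of picoseconds.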

By the time the laser light reaches the camera, only specks remain, but the sensor captures every one and sends it to a highly efficient algorithm the team developed, which untangles these echoes of light to decipher the hidden tableau.
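The article does not detail how the team's algorithm untangles the echoes, so the sketch below swaps in a much simpler, well-known baseline, naive backprojection, purely to show the idea. It assumes a flat 2D scene (the wall is the line z = 0), a confocal scan, noise-free photon-count histograms, a single hidden point, and time expressed as path length (speed of light = 1). All names and numbers (`simulate_transients`, `backproject`, `BIN_WIDTH`, the grid) are hypothetical.

```python
import numpy as np

# Assumption: time is expressed as path length (c = 1), so each histogram bin
# covers 1 cm of round-trip path. Values are illustrative only.
BIN_WIDTH = 0.01
N_BINS = 512


def simulate_transients(wall_x, hidden_xz):
    """Noise-free photon-count histograms, one row per scanned wall point.

    Confocal assumption: light leaves and returns via the same wall point,
    so the round trip is twice the wall-to-object distance.
    """
    hist = np.zeros((len(wall_x), N_BINS))
    hx, hz = hidden_xz
    for i, wx in enumerate(wall_x):
        round_trip = 2.0 * np.hypot(hx - wx, hz)
        b = int(np.rint(round_trip / BIN_WIDTH))
        if b < N_BINS:
            hist[i, b] += 1.0
    return hist


def backproject(hist, wall_x, grid_x, grid_z):
    """Naive backprojection: each candidate voxel sums the counts in the bin
    where its own echo would have arrived, for every scanned wall point."""
    volume = np.zeros((len(grid_z), len(grid_x)))
    for i, wx in enumerate(wall_x):
        # Broadcast to a (len(grid_z), len(grid_x)) array of distances.
        dist = np.hypot(grid_x[None, :] - wx, grid_z[:, None])
        bins = np.rint(2.0 * dist / BIN_WIDTH).astype(int)
        valid = bins < N_BINS
        volume[valid] += hist[i, bins[valid]]
    return volume


wall_x = np.linspace(-1.0, 1.0, 32)   # scanned points on the visible wall
hidden = (0.3, 1.2)                   # hypothetical hidden reflector
hist = simulate_transients(wall_x, hidden)

grid_x = np.linspace(-1.0, 1.0, 41)   # 5 cm voxel spacing
grid_z = np.linspace(0.5, 2.0, 31)
volume = backproject(hist, wall_x, grid_x, grid_z)

iz, ix = np.unravel_index(np.argmax(volume), volume.shape)
estimate = (float(grid_x[ix]), float(grid_z[iz]))
print("brightest voxel:", estimate)
```

The brightest voxel should land at or next to the hidden point, because only there do the echoes from every wall point agree. Note that this baseline visits every voxel for every wall point, so its cost grows quickly with scene size; that is one reason efficient reconstruction matters for real-time use.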

“When you’re watching the laser scanning it out, you don’t see anything,” Lindell says. “With this hardware, we can basically slow down time and reveal these tracks of light. It almost looks like magic.”

The system can scan at four frames per second and can reconstruct a scene at 60 frames per second on a computer equipped with a graphics processing unit (GPU).
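Reading the two rates together takes a little arithmetic. The back-of-envelope sketch below converts each rate into a per-frame time budget. It assumes, which the article does not state, that scanning and reconstruction run as a pipeline, so the slower stage sets how often a new view of the hidden scene appears.

```python
SCAN_FPS = 4.0    # scan (acquisition) rate reported in the article
RECON_FPS = 60.0  # GPU reconstruction rate reported in the article


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at a given frame rate."""
    return 1000.0 / fps


scan_ms = frame_budget_ms(SCAN_FPS)     # 250 ms to acquire one frame
recon_ms = frame_budget_ms(RECON_FPS)   # roughly 16.7 ms to reconstruct one

# Assumption: the two stages overlap in a pipeline, so the slower stage
# limits how many new reconstructions per second can be shown.
live_fps = min(SCAN_FPS, RECON_FPS)
print(f"scan {scan_ms:.0f} ms, reconstruction {recon_ms:.1f} ms, live {live_fps:.0f} fps")
```

Under that assumption, the scan rather than the reconstruction is the bottleneck: each reconstruction finishes well inside the 250 ms it takes to acquire the next frame.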

Finding inspiration

To advance their algorithm, the team looked to other fields for inspiration. The researchers were particularly drawn to seismic imaging systems—which bounce sound waves off underground layers of earth to learn what’s beneath the surface—and reconfigured their algorithm to likewise interpret bouncing light as waves emanating from the hidden objects.

The result kept the high speed and low memory usage of their earlier approach while improving the system’s ability to see large scenes containing a variety of materials.

“There are many ideas being used in other spaces—seismology, imaging with satellites, synthetic aperture radar—that are applicable to looking around corners,” says Matthew O’Toole, an assistant professor at Carnegie Mellon University who was previously a postdoctoral fellow in Wetzstein’s lab. “We’re trying to take a little bit from these fields and we’ll hopefully be able to give something back to them at some point.”

Humble beginning

Being able to see real-time movement from otherwise invisible light bounced around a corner was a thrilling moment for the team, but a practical system for autonomous cars or robots will require further enhancements.

“It’s very humble steps. The movement still looks low-resolution and it’s not super-fast but compared to the state-of-the-art last year it is a significant improvement,” Wetzstein says. “We were blown away the first time we saw these results because we’ve captured data that nobody’s seen before.”

The team hopes to move toward testing their system on autonomous research cars, while looking into other possible applications, such as medical imaging that can see through tissues.

Along with improving speed and resolution, they’ll also work on making their system more versatile, so it can cope with challenging visual conditions that drivers encounter, such as fog, rain, sandstorms, and snow.

Researchers will present the camera system at the SIGGRAPH 2019 conference.

The National Science Foundation, a Terman Faculty Fellowship, a Sloan Fellowship, the King Abdullah University of Science and Technology, the Defense Advanced Research Projects Agency, the US Army Research Laboratory, the Center for Automotive Research at Stanford, and a Stanford Graduate Fellowship funded the work.

Source: Stanford University