The option of receiving a skin-cancer diagnosis by smartphone could save lives, say researchers.

It’s scary enough making a doctor’s appointment to see if a strange mole could be cancerous. Imagine, for example, that you were in that situation while also living far away from the nearest doctor, unable to take time off work, and not sure if you had the money to cover the cost of the visit.

Universal access to health care was on the minds of computer scientists when they set out to create an artificially intelligent diagnosis algorithm for skin cancer. They built a database of nearly 130,000 skin disease images and trained their algorithm to visually diagnose potential cancer. From the very first test, it performed with inspiring accuracy.

“We realized it was feasible, not just to do something well, but as well as a human dermatologist,” says Sebastian Thrun, an adjunct professor in the Stanford Artificial Intelligence Laboratory. “That’s when our thinking changed. That’s when we said, ‘Look, this is not just a class project for students, this is an opportunity to do something great for humanity.'”

A diagnosis competition

The final product, the subject of a paper in the journal Nature, was tested against 21 board-certified dermatologists. In its diagnoses of skin lesions, which represented the most common and deadliest skin cancers, the algorithm matched the performance of dermatologists.

Every year there are about 5.4 million new cases of skin cancer in the United States, and while the five-year survival rate for melanoma detected in its earliest stages is around 97 percent, that drops to approximately 14 percent if it’s detected in its latest stages. Early detection could likely have an enormous impact on skin cancer outcomes.

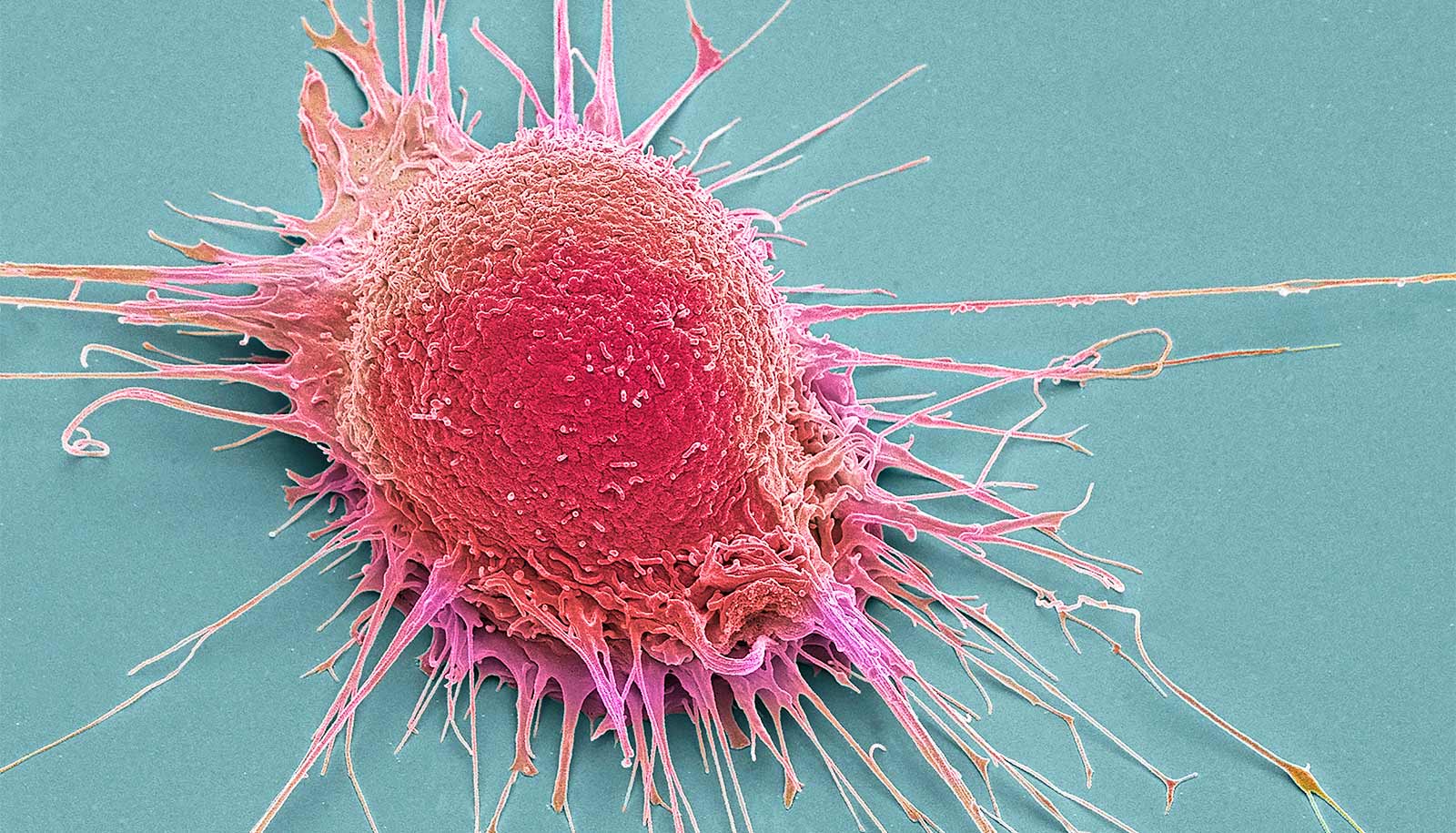

Diagnosing skin cancer begins with a visual examination. A dermatologist usually looks at the suspicious lesion with the naked eye and with the aid of a dermatoscope, which is a handheld microscope that provides low-level magnification of the skin. If these methods are inconclusive or lead the dermatologist to believe the lesion is cancerous, a biopsy is the next step.

1.28 million images

Bringing this algorithm into the examination process follows a trend in computing that combines visual processing with deep learning, a type of artificial intelligence modeled after neural networks in the brain.

Deep learning has a decades-long history in computer science, but it has only recently been applied to visual processing tasks. The essence of machine learning, including deep learning, is that a computer is trained to figure out a problem rather than having the answers programmed into it.

“We made a very powerful machine learning algorithm that learns from data,” says Andre Esteva, co-lead author of the paper and a graduate student in Thrun’s lab. “Instead of writing into computer code exactly what to look for, you let the algorithm figure it out.”

The algorithm was fed each image as raw pixels with an associated disease label. Compared to other methods for training algorithms, this one requires very little processing or sorting of the images prior to classification, allowing the algorithm to work off a wider variety of data.

Rather than building an algorithm from scratch, researchers began with an algorithm developed by Google that was already trained on 1.28 million images spanning 1,000 object categories. While it was primed to be able to differentiate cats from dogs, the researchers needed it to know a malignant carcinoma from a benign seborrheic keratosis.
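The idea of reusing an already-trained model can be sketched in a few lines of Python. This is only a toy illustration, not the researchers' method: `pretrained_features` is a hypothetical stand-in for the body of a pretrained network, the four tiny "images" are made up, and the sketch retrains only a new final layer, which is one common form of transfer learning. The article does not say which parts of the Google model were retrained.

```python
import math


def pretrained_features(pixels):
    """Hypothetical stand-in for a frozen, pretrained network body.

    A real network would output learned features; this toy version
    just returns the mean and variance of the pixel values.
    """
    mean = sum(pixels) / len(pixels)
    var = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    return [mean, var]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(images, labels, epochs=200, lr=0.5):
    """Fit only a new logistic-regression layer on top of the frozen features."""
    feats = [pretrained_features(img) for img in images]
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            g = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    return w, b


def predict(w, b, pixels):
    """Probability that the image belongs to class 1 under the new head."""
    x = pretrained_features(pixels)
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Made-up data: dark patches are labeled 1, light patches 0.
images = [
    [0.1, 0.2, 0.3, 0.2],
    [0.2, 0.3, 0.2, 0.1],
    [0.8, 0.9, 0.7, 0.8],
    [0.9, 0.8, 0.8, 0.7],
]
labels = [1, 1, 0, 0]
w, b = train_head(images, labels)
```

The feature function is never updated during training; only the weights `w` and bias `b` of the new layer change, which is why far less task-specific data is needed than when training from scratch.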

“There’s no huge dataset of skin cancer that we can just train our algorithms on, so we had to make our own,” says Brett Kuprel, co-lead author of the paper and a graduate student in the Thrun lab. “We gathered images from the internet and worked with the medical school to create a nice taxonomy out of data that was very messy—the labels alone were in several languages, including German, Arabic, and Latin.”

After going through the necessary translations, the researchers collaborated with dermatologists at Stanford Medicine, as well as coauthor Helen M. Blau, professor of microbiology and immunology. Together, the team worked to classify the hodgepodge of internet images, many of which varied in angle, zoom, and lighting. In the end, they amassed about 130,000 images of skin lesions representing over 2,000 different diseases.

During testing, the researchers used only high-quality, biopsy-confirmed images provided by the University of Edinburgh and the International Skin Imaging Collaboration Project that represented the most common and deadliest skin cancers—malignant carcinomas and malignant melanomas.

Sensitivity-specificity curve

The 21 dermatologists were asked whether, based on each image, they would proceed with biopsy or treatment, or reassure the patient. The researchers evaluated success by how well the dermatologists were able to correctly diagnose both cancerous and non-cancerous lesions in over 370 images.

The algorithm’s performance was measured through the creation of a sensitivity-specificity curve, where sensitivity represented its ability to correctly identify malignant lesions and specificity represented its ability to correctly identify benign lesions. It was assessed through three key diagnostic tasks: keratinocyte carcinoma classification, melanoma classification, and melanoma classification when viewed using dermoscopy.

In all three tasks, the algorithm matched the performance of the dermatologists, with the area under the sensitivity-specificity curve amounting to at least 91 percent of the total area of the graph.
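For readers who want to see how such a curve and its area are computed, here is a minimal sketch in Python. The scores and labels are invented for illustration and have nothing to do with the study's data; the function names are likewise hypothetical. Each threshold on the model's score yields one (sensitivity, specificity) point, and the area is the trapezoidal integral of sensitivity over specificity.

```python
def sensitivity_specificity(scores, labels, threshold):
    """Call a lesion malignant when its score is at or above the threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def curve(scores, labels):
    """One (sensitivity, specificity) point per threshold, lowest threshold first."""
    thresholds = sorted(set(scores)) + [float("inf")]
    return [sensitivity_specificity(scores, labels, t) for t in thresholds]


def area_under_curve(points):
    """Trapezoidal area with specificity on the x-axis and sensitivity on the y-axis.

    Points must be ordered by increasing threshold, so specificity never decreases.
    """
    area = 0.0
    for (sens0, spec0), (sens1, spec1) in zip(points, points[1:]):
        area += (spec1 - spec0) * (sens0 + sens1) / 2
    return area


# Made-up example: 1 = malignant, 0 = benign.
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 0]
auc = area_under_curve(curve(scores, labels))
```

An area of 1.0 would mean every malignant lesion scored higher than every benign one; an area of 0.5 is what random guessing would produce.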

An added advantage is that, unlike a person, the algorithm can be made more or less sensitive, so its response can be tuned depending on what is being assessed.
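In practice, tuning of this kind usually means moving the cutoff applied to the model's output score. The sketch below, in Python, uses invented scores and hypothetical function names, and assumes the model outputs a single malignancy score per image. It picks the highest cutoff that still reaches a chosen sensitivity.

```python
def sensitivity(scores, labels, threshold):
    """Fraction of malignant cases (label 1) scored at or above the threshold."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    return sum(1 for s in positives if s >= threshold) / len(positives)


def specificity(scores, labels, threshold):
    """Fraction of benign cases (label 0) scored below the threshold."""
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    return sum(1 for s in negatives if s < threshold) / len(negatives)


def pick_threshold(scores, labels, target_sensitivity):
    """Return the highest threshold whose sensitivity meets the target."""
    for t in sorted(set(scores), reverse=True):
        if sensitivity(scores, labels, t) >= target_sensitivity:
            return t
    raise ValueError("no threshold reaches the requested sensitivity")


# Made-up example: 1 = malignant, 0 = benign.
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 0]

cautious = pick_threshold(scores, labels, 1.0)  # catch every malignant case
relaxed = pick_threshold(scores, labels, 0.6)   # accept some misses
```

Lowering the cutoff catches more malignant lesions at the cost of more false alarms on benign ones, so the right setting depends on the use case, for example broad screening versus confirming a suspected diagnosis.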

Although this algorithm currently exists on a computer, the team would like to make it smartphone compatible.

“My main eureka moment was when I realized just how ubiquitous smartphones will be,” says Esteva. “Everyone will have a supercomputer in their pockets with a number of sensors in it, including a camera. What if we could use it to visually screen for skin cancer? Or other ailments?”

The team believes it will be relatively easy to transition the algorithm to mobile devices, but further testing in a real-world clinical setting is still needed.

“Advances in computer-aided classification of benign versus malignant skin lesions could greatly assist dermatologists in improved diagnosis for challenging lesions and provide better management options for patients,” says Susan Swetter, professor of dermatology and director of the Pigmented Lesion and Melanoma Program at the Stanford Cancer Institute, and another coauthor of the paper.

“However, rigorous prospective validation of the algorithm is necessary before it can be implemented in clinical practice, by practitioners and patients alike.”

Source: Stanford University