A breakthrough could enable lightweight, inexpensive multispectral cameras for uses such as cancer surgery, food safety inspection, and precision agriculture.

Researchers have demonstrated photodetectors that could span an unprecedented range of light frequencies. They achieved this feat using tailored electromagnetic materials to create on-chip spectral filters.

A typical camera only captures visible light, which is a small fraction of the available spectrum. Other cameras might specialize in infrared or ultraviolet wavelengths, for example, but few can capture light from disparate points along the spectrum. And those that can do so suffer from a myriad of drawbacks, such as complicated and unreliable fabrication, slow functional speeds, bulkiness that can make them difficult to transport, and costs up to hundreds of thousands of dollars.

As reported today in the journal Nature Materials, Duke University researchers demonstrate a new type of broad-spectrum photodetector that can be implemented on a single chip, allowing it to capture a multispectral image in a few trillionths of a second and cost just tens of dollars.

The technology relies on plasmonics, the use of nanoscale physical phenomena to trap certain frequencies of light.

“It wasn’t obvious at all that we could do this,” says Maiken Mikkelsen, associate professor of electrical and computer engineering at Duke University. “It’s quite astonishing actually that not only do our photodetectors work in preliminary experiments, but we’re seeing new, unexpected physical phenomena that will allow us to speed up how fast we can do this detection by many orders of magnitude.”

Tiny silver cubes

Mikkelsen, graduate student Jon Stewart, and their team fashioned silver cubes just a hundred nanometers wide and placed them on a transparent film only a few nanometers above a thin layer of gold. When light strikes the surface of a nanocube, it excites the silver’s electrons, trapping the light’s energy—but only at a specific frequency.

The size of the silver nanocubes and their distance from the base layer of gold determine that frequency, while the amount of light absorbed can be tuned by controlling the spacing between the nanoparticles. By precisely tailoring these sizes and spacings, researchers can make the system respond to any electromagnetic frequency they want.

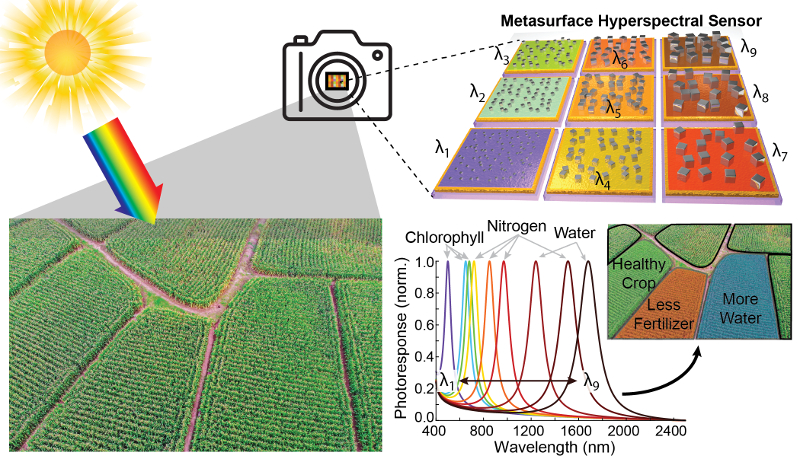

To harness this fundamental physical phenomenon for a commercial multispectral camera, researchers would need to fashion a grid of tiny, individual detectors, each tuned to a different frequency of light, into a larger “superpixel.”

In a step toward that end, the team demonstrates four individual photodetectors tailored to wavelengths between 750 and 1900 nanometers. The plasmonic metasurfaces absorb energy from incoming light and heat up, creating an electric voltage in a layer of pyroelectric material called aluminum nitride sitting directly below them. That voltage is then read by a bottom layer of a silicon semiconductor contact, which transmits the signal to a computer to analyze.
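Because the article describes the detectors by wavelength but the filters by frequency, a short conversion may help. The Python sketch below models a hypothetical four-channel superpixel. The article gives only the 750 to 1900 nanometer range, so the evenly spaced channel centers and the names `Channel` and `make_superpixel` are illustrative assumptions, not the team's design.

```python
from dataclasses import dataclass

SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by SI definition


@dataclass(frozen=True)
class Channel:
    """One detector in a hypothetical superpixel, tuned to one wavelength."""
    wavelength_nm: float

    @property
    def frequency_thz(self) -> float:
        # f = c / wavelength, converted from Hz to THz
        return SPEED_OF_LIGHT_M_PER_S / (self.wavelength_nm * 1e-9) / 1e12


def make_superpixel(start_nm: float, stop_nm: float, count: int) -> list[Channel]:
    """Evenly space `count` (at least 2) channels across the range.

    Even spacing is an assumption; the article does not list the
    individual wavelengths of the four demonstrated detectors.
    """
    step = (stop_nm - start_nm) / (count - 1)
    return [Channel(start_nm + i * step) for i in range(count)]


superpixel = make_superpixel(750, 1900, 4)
for channel in superpixel:
    print(f"{channel.wavelength_nm:7.1f} nm  ->  {channel.frequency_thz:6.1f} THz")
```

The reported wavelength range corresponds to frequencies from roughly 158 to 400 terahertz.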

Cheaper, faster photodetectors

According to Mikkelsen, commercial photodetectors have been made with these types of pyroelectric materials before, but have always suffered from two major drawbacks. They haven’t been able to focus on specific electromagnetic frequencies, and the thick layers of aluminum nitride needed to create enough of an electric signal have caused them to operate at very slow speeds.

“But our plasmonic detectors can be tuned to any frequency and trap so much energy that they generate quite a lot of heat,” says Stewart. “That efficiency means we only need a thin layer of aluminum nitride, which greatly speeds up the process.”

The current standard for detection times in cameras leveraging pyroelectric materials is about 53 microseconds. Mikkelsen’s plasmonics-based approach sparked a signal in just 700 picoseconds, which is roughly 75,000 times faster. But because the experimental machinery limited those detection times, the new photodetectors might work even faster.

What could multispectral cameras do?

Mikkelsen sees several potential uses for commercial cameras based on the technology, because the process required to manufacture these photodetectors is relatively fast, inexpensive, and scalable.

Surgeons might use multispectral imaging to tell the difference between cancerous and healthy tissue during surgery. Food and water safety inspectors could use it to tell when dangerous bacteria have contaminated a chicken breast.

With the support of the Gordon and Betty Moore Foundation, Mikkelsen has set her sights on precision agriculture as a first target. While plants may only look green or brown to the naked eye, the light outside of the visible spectrum that reflects from their leaves and flowers contains a cornucopia of valuable information.

“Obtaining a ‘spectral fingerprint’ can precisely identify a material and its composition,” says Mikkelsen. “Not only can it indicate the type of plant, but it can also determine its condition, whether it needs water, is stressed, or has low nitrogen content, indicating a need for fertilizer. It is truly astonishing how much we can learn about plants by simply studying a spectral image of them.”

Multispectral imaging could enable precision agriculture by allowing fertilizer, pesticides, herbicides, and water to go only where needed, saving water and money and reducing pollution.

Imagine a multispectral camera mounted on a drone mapping a field’s condition and transmitting that information to a tractor designed to deliver fertilizer or pesticides at variable rates across the fields.
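One common way to turn such a field map into tractor instructions is a vegetation index. The sketch below uses the normalized difference vegetation index (NDVI), a standard remote-sensing measure that the article itself does not mention. The reflectance values, thresholds, and application rates are invented for illustration, and `fertilizer_rate` and `prescription_map` are hypothetical helpers.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red)."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def fertilizer_rate(index: float) -> float:
    """Map an NDVI value to a rate in kg per hectare.

    The thresholds and rates are made up for this sketch.
    """
    if index < 0.3:   # treated here as bare soil: apply nothing
        return 0.0
    if index < 0.6:   # treated here as stressed vegetation: apply more
        return 60.0
    return 20.0       # treated here as healthy vegetation: apply less


def prescription_map(field):
    """Convert a grid of (nir, red) reflectance pairs into a grid of rates."""
    return [[fertilizer_rate(ndvi(nir, red)) for nir, red in row] for row in field]


# Hypothetical 2 x 2 field of (near-infrared, red) reflectance readings
field = [
    [(0.50, 0.08), (0.45, 0.10)],
    [(0.30, 0.12), (0.10, 0.09)],
]
rates = prescription_map(field)
for row in rates:
    print(row)
```

A real system would calibrate the thresholds per crop and growth stage and would likely draw on more than two spectral bands.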

The process currently used to produce fertilizer is estimated to account for up to 2% of global energy consumption and up to 3% of global carbon dioxide emissions. At the same time, researchers estimate that 50 to 60% of fertilizer produced goes to waste. Accounting for fertilizer alone, precision agriculture holds an enormous potential for energy savings and greenhouse gas reduction, not to mention the estimated $8.5 billion in direct cost savings each year, according to the United States Department of Agriculture.
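The figures above can be combined into a rough upper bound on the energy and emissions tied to fertilizer that goes to waste. The calculation below assumes, as a simplification not made in the article, that energy use and emissions scale in direct proportion to the amount of fertilizer produced.

```python
# Upper-bound shares quoted in the article
ENERGY_SHARE = 0.02          # up to 2% of global energy consumption
CO2_SHARE = 0.03             # up to 3% of global carbon dioxide emissions
WASTE_RANGE = (0.50, 0.60)   # 50 to 60% of fertilizer goes to waste


def wasted_share(total_share: float, waste_fraction: float) -> float:
    """Share of the global total attributable to wasted fertilizer.

    Assumes the footprint is proportional to the quantity produced.
    """
    return total_share * waste_fraction


for waste in WASTE_RANGE:
    energy = wasted_share(ENERGY_SHARE, waste)
    co2 = wasted_share(CO2_SHARE, waste)
    print(f"waste {waste:.0%}: up to {energy:.1%} of energy, up to {co2:.1%} of CO2")
```

Under that assumption, wasted fertilizer would account for up to about 1 to 1.2% of global energy consumption and about 1.5 to 1.8% of global carbon dioxide emissions.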

Several companies are already pursuing these types of projects. For example, IBM is piloting a project in India using satellite imagery to assess crops in this manner. This approach, however, is very expensive and limiting, which is why Mikkelsen envisions a cheap, handheld detector that could image crop fields from the ground or from inexpensive drones.

“Imagine the impact not only in the United States, but also in low- and middle-income countries where there are often shortages of fertilizer, pesticides, and water,” says Mikkelsen. “By knowing where to apply those sparse resources, we could increase crop yield significantly and help reduce starvation.”

The Air Force Office of Scientific Research and the National Defense Science & Engineering Graduate Fellowship Program supported the work.

Source: Duke University