Gender bias may not affect our perception of whether robots are competent at different jobs, researchers report.

The studies also show, however, that people are not great at judging what robots can do well, regardless of the gender we assign them.

Researchers originally intended to test for gender bias, that is, whether people thought a robot believed to be female might be less competent at some jobs than a robot believed to be male, and vice versa. But they found no significant sexism against the machines.

“This did surprise us. There was only a very slight difference in a couple of jobs but not significant. There was, for example, a small preference for a male robot over a female robot as a package deliverer,” says Ayanna Howard, a professor and chair of the School of Interactive Computing at the Georgia Institute of Technology and the principal investigator on both studies.

Although robots are not sentient, as we interact with them more and more, we begin to humanize the machines. Howard studies what goes right and what goes wrong as we integrate robots into society, and much of both has to do with how humans feel around robots.

How we treat robots

“Surveillance robots are not socially engaging, but when we see them, we still may act like we would when we see a police officer, maybe not jaywalking and being very conscientious of our behavior,” says Howard, who is also chair and professor in bioengineering at the School of Electrical and Computer Engineering.

“Then there are emotionally engaging robots designed to tap into our feelings and work with our behavior. If you look at these examples, they lead us to treat these robots as if they were fellow intelligent beings.”

It’s a good thing robots don’t have feelings, because what study participants lacked in gender bias they more than made up for in judgments against robot competence. That predisposition was so strong that Howard wondered whether it might have overridden any potential gender bias against robots. After all, social science studies show that gender biases are still prevalent with respect to human jobs, even if implicit.

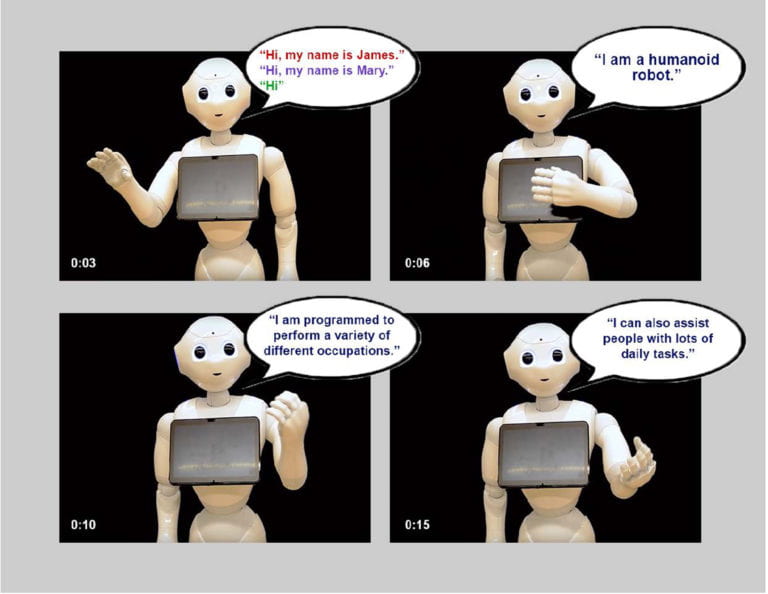

In questionnaires, humanoid robots introduced themselves via video to randomly recruited online survey respondents, who ranged from their twenties to their seventies and were mostly college educated. The respondents ranked the robots’ competence at various careers, trusting the machines to perform only a handful of simple jobs competently.

Misunderstanding what bots do best

“The results baffled us because the things that people thought robots were less able to do were things that they do well. One was the profession of surgeon. There are Da Vinci robots that are pervasive in surgical suites, but respondents didn’t think robots were competent enough,” Howard says. “Security guard—people didn’t think robots were competent at that, and there are companies that specialize in great robot security.”

Cumulatively, the 200 participants across the two studies thought robots would also fail as nannies, therapists, nurses, firefighters, and totally bomb as comedians. But they felt confident bots would make fantastic package deliverers and receptionists, pretty good servers, and solid tour guides.

The researchers could not say where the competence biases originate. Howard could only speculate that some of the bad rap may have come from media stories of robots doing things like falling into swimming pools or injuring people.

Gendering robots

Despite the lack of gender bias, participants readily assigned genders to the humanoid robots. For example, people accepted the gender cues robots gave when introducing themselves in videos.

If a robot said, “Hi, my name is James,” in a male-sounding voice, people mostly identified the robot as male. If it said, “Hi, my name is Mary,” in a female voice, people mostly said it was female.

Some robots greeted people by saying “Hi” in a neutral-sounding voice, and still, most participants assigned the robot a gender. The most common choice was male, followed by neutral, and then female. For Howard, this was an important takeaway from the study for robot developers.

“Developers should not force gender on robots. People are going to gender according to their own experiences. Give the user that right. Don’t reinforce gender stereotypes,” Howard says.

Gendering is only one aspect of robot humanization, something we do altogether too much, Howard says. Some in her field advocate for not building robots in humanoid form at all in order to discourage it, but Howard does not go that far.

“Robots can be good for social interaction. They could be very helpful in elder care facilities to keep people company. They might also make better nannies than letting the TV babysit the kids,” says Howard, who also defended robots’ comedic talent, provided they’re programmed for that.

“If you ever go to an amusement park, there are animatronics that tell really good jokes.”

The researchers submitted the two studies to conferences that were canceled due to COVID-19. The first appears in the Proceedings of the 2020 ACM Conference on Human-Robot Interaction. The second appears in CHI 2020 Extended Abstracts.

Funding for the research came from the National Science Foundation and the Alfred P. Sloan Foundation. Any findings, conclusions, or recommendations are those of the authors and not necessarily of the sponsors.

Source: Georgia Tech