A machine learning algorithm uses computational topology to study how cells in the embryo organize themselves into tissue-like architectures.

For healthy tissue to form, cells in the embryo must organize themselves in the right way in the right place at the right time. When this process goes wrong, it can result in birth defects, impaired tissue regeneration, or cancer. That makes understanding how different cell types organize into a complex tissue architecture one of the most fundamental questions in developmental biology.

While researchers are still some distance from fully understanding the process, a group of Brown University scientists has spent the past few years helping the field inch closer. Their secret? A branch of mathematics called topology.

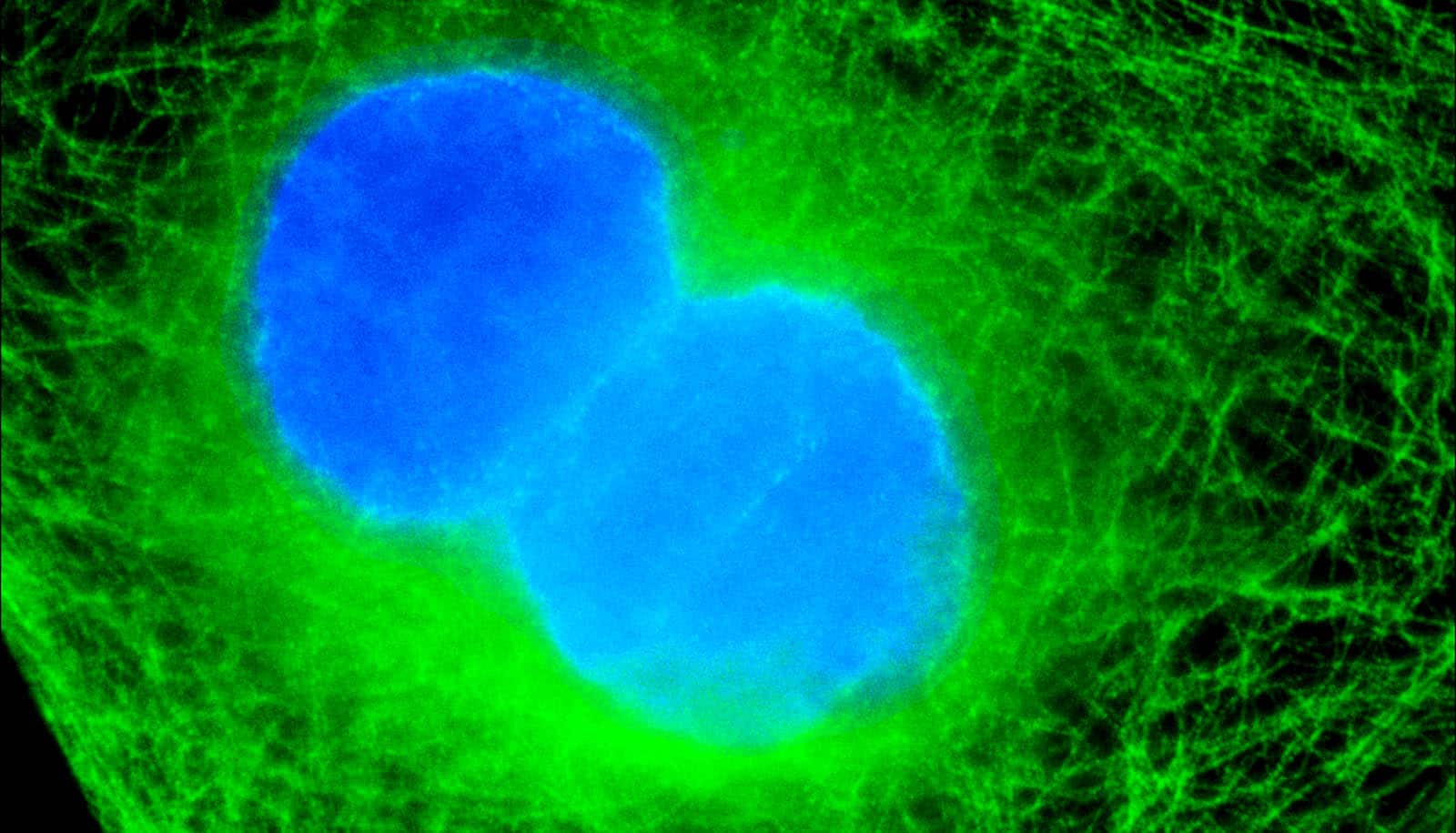

The research team, made up of biomedical engineers and applied mathematicians, created a machine learning algorithm that uses computational topology to profile shapes and spatial patterns in embryos, with the aim of studying how embryonic cells organize themselves into tissue-like architectures. In a new study, they extend that system, opening a path to studying how multiple types of cells assemble themselves.

The work appears in npj Systems Biology and Applications.

“In tissues, there may be differences in how one cell adheres to the same cell type, relative to how it adheres to a different cell type,” says Ian Y. Wong, an associate professor in Brown’s School of Engineering who helped develop the algorithm. “There’s this interesting question of how these cells know exactly where to end up within a given tissue, which is often spatially compartmentalized into distinct regions.”

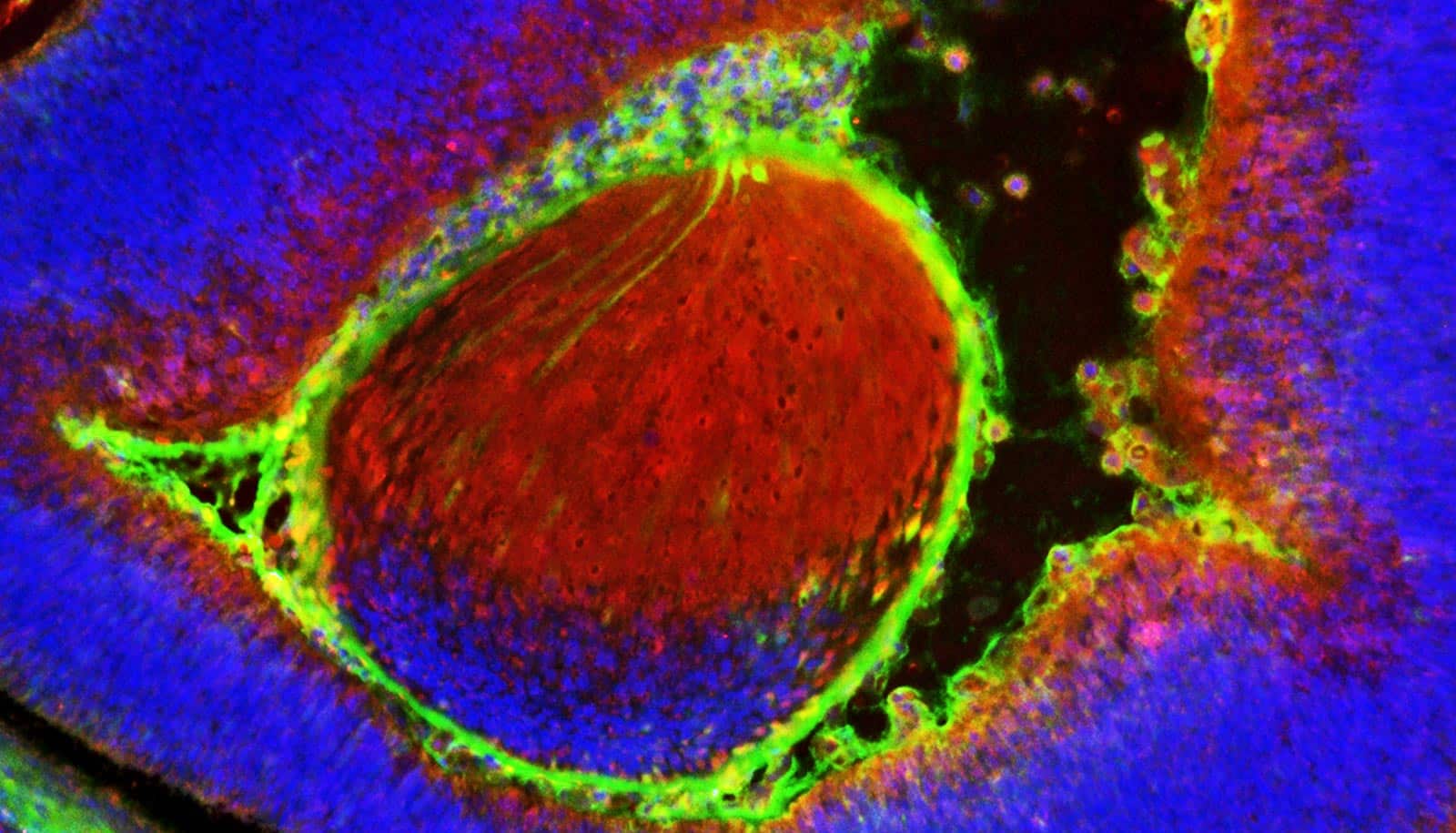

For example, in an animal embryo, the outer layer of cells goes on to form skin, the middle layer forms muscle and bone, while the innermost layer forms the liver or lungs. Cells within each layer will preferentially adhere to each other, sorting apart from cells in other layers that go on to form other parts of the body.

In the 1970s, scientists discovered that cells within frog embryos could be gently separated and that, when mixed back together, they would spontaneously rearrange into their initial organization. This occurs because the cells have different affinities for each other, and as they assemble and cluster, certain topological patterns of linkages and loops are preserved.

“In the context of these spatial arrangements of tissues, you can learn a lot from what’s there, but also from what’s not there at the same time,” says Dhananjay Bhaskar, a recent Brown PhD graduate who led the work and is now a postdoctoral researcher at Yale University.

In 2021, the Brown researchers showed that their approach could profile the topological traits of a single cell type organizing into different spatial configurations and make predictions about it.

The hiccup with the original system was that it was slow and labor-intensive. The algorithm painstakingly compared the topological features of one set of cell positions, one by one, against those in other sets to determine how topologically similar or different they were. The process took several hours, which held the algorithm back from its full potential in understanding how cells assemble themselves. It also made it hard to easily and accurately compare what happens when conditions change, a key step in breaking down what happens when things go wrong.

In the new study, the research team begins to address that limitation with what are called persistence images. These images are a standardized, picture-like format for representing topological features, enabling rapid comparison across large datasets of cell positions.
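To make the idea concrete, here is a minimal sketch of how a persistence image can be built from a list of topological features. It is an illustration under stated assumptions, not the team's code: the function name `persistence_image`, the grid size, the Gaussian width `sigma`, and the toy diagrams are all hypothetical, and the Gaussian is sampled at pixel centers rather than integrated over each pixel. Each feature is given as a (birth, death) pair, the scales at which a loop or cluster appears and disappears.

```python
import numpy as np

def persistence_image(diagram, resolution=20, sigma=0.1, extent=(0.0, 1.0)):
    """Rasterize (birth, death) pairs into a fixed-size image (illustrative sketch)."""
    pairs = np.asarray(diagram, dtype=float).reshape(-1, 2)
    births = pairs[:, 0]
    persistence = pairs[:, 1] - pairs[:, 0]  # how long each feature survives

    lo, hi = extent
    # Pixel centers along the birth axis and the persistence axis.
    centers = lo + (np.arange(resolution) + 0.5) * (hi - lo) / resolution
    grid_b, grid_p = np.meshgrid(centers, centers, indexing="xy")

    image = np.zeros((resolution, resolution))
    for b, p in zip(births, persistence):
        # One Gaussian bump per feature, weighted by its persistence so that
        # long-lived features count more than short-lived noise.
        bump = np.exp(-((grid_b - b) ** 2 + (grid_p - p) ** 2) / (2 * sigma ** 2))
        image += p * bump
    return image

# Two hypothetical diagrams; every image has the same shape, so comparing
# them is a single array operation instead of a feature-by-feature matching.
diagram_a = [(0.1, 0.5), (0.2, 0.9)]
diagram_b = [(0.1, 0.5), (0.3, 0.4)]
img_a = persistence_image(diagram_a)
img_b = persistence_image(diagram_b)
distance = np.linalg.norm(img_a - img_b)
```

Because every diagram maps to an array of the same size, comparing two sets of cell positions reduces to simple arithmetic on images, which is what makes large-scale comparison fast.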

They then used those images to train other algorithms to generate “digital fingerprints” that capture the key topological features of the data. This drives down the computation time from hours to seconds, enabling the researchers to compare thousands of simulations of cell organization by using the fingerprints to classify them into similar patterns without human input.
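The sketch below shows one way image-like inputs can be compressed into short fingerprints and grouped without labels. The article does not say which algorithms the team trained, so the choices here are stand-ins picked for brevity: principal component analysis for the fingerprints and a basic k-means for the grouping. The function names `fingerprints` and `kmeans` and the toy 8×8 images are hypothetical.

```python
import numpy as np

def fingerprints(images, n_components=2):
    """Compress images into short vectors with PCA (a stand-in technique)."""
    X = np.asarray(images, dtype=float).reshape(len(images), -1)
    X = X - X.mean(axis=0)                      # center each pixel
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    return X @ Vt[:n_components].T              # project onto top components

def kmeans(points, k, n_iter=50):
    """Basic k-means with deterministic farthest-point initialization."""
    centers = [points[0]]
    for _ in range(1, k):
        # Pick the point farthest from all centers chosen so far.
        d = np.min([np.linalg.norm(points - c, axis=1) for c in centers], axis=0)
        centers.append(points[d.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = points[labels == j].mean(axis=0)
    return labels

# Toy data: two hypothetical families of 8x8 images with a little noise.
rng = np.random.default_rng(0)
pattern_a = np.zeros((8, 8)); pattern_a[2, 2] = 1.0
pattern_b = np.zeros((8, 8)); pattern_b[5, 5] = 1.0
images = [pattern_a + 0.01 * rng.normal(size=(8, 8)) for _ in range(5)]
images += [pattern_b + 0.01 * rng.normal(size=(8, 8)) for _ in range(5)]

codes = fingerprints(images)    # one short fingerprint per image
labels = kmeans(codes, k=2)     # groups found without human-provided labels
```

On this toy data the first five images fall into one group and the last five into the other. Real simulations would need more care in choosing the number of components and groups.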

The researchers say the goal is to work backward from the final pattern and infer the rules that describe how different cell types arrange themselves. For instance, by adjusting how adhesive certain cells are, the researchers can identify how and when dramatic alterations occur in tissue architecture.

The approach has the potential to be applied to understanding what happens when the developmental process goes off track and for laboratory experiments testing how different drugs can alter cell migration and adhesion.

“If you can see a certain pattern, we can use our algorithm to tell why that pattern emerges,” Bhaskar says. “In a way, it’s telling us the rules of the game when it comes to cells assembling themselves.”

The research had support from Brown University’s Data Science Institute, the National Institute of General Medical Sciences, and the National Science Foundation. It was conducted using computational resources and services at Brown’s Center for Computation and Visualization.

Source: Brown University