New research involving neuroimaging and AI describes the complex network within the brain that comprehends the meaning of a sentence.

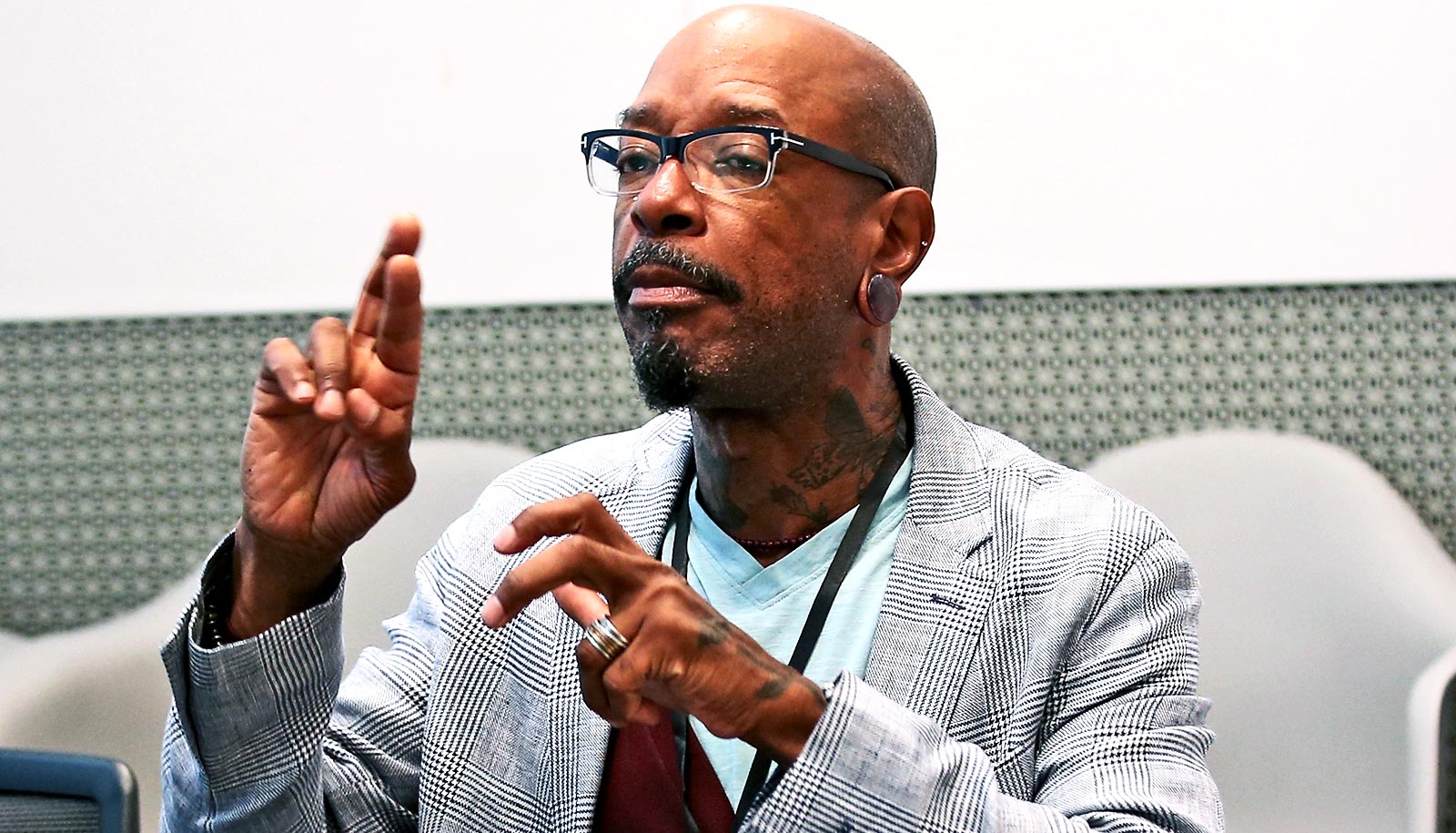

“It has been unclear whether the integration of this meaning is represented in a particular site in the brain, such as the anterior temporal lobes, or reflects a more network-level operation that engages multiple brain regions,” says Andrew Anderson, research assistant professor in the University of Rochester Del Monte Institute for Neuroscience and lead author of the study in the Journal of Neuroscience.

“The meaning of a sentence is more than the sum of its parts. Take a very simple example, ‘the car ran over the cat’ and ‘the cat ran over the car’: each sentence has exactly the same words, but those words have a totally different meaning when reordered.”
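Anderson's example can be made concrete with a minimal sketch. It is an illustration only, not part of the study: the helper names `bag_of_words` and `ordered_words` are hypothetical. A representation that only counts words cannot tell the two sentences apart, while one that keeps word order can.

```python
from collections import Counter


def bag_of_words(sentence):
    """Count each word, discarding word order (hypothetical helper)."""
    return Counter(sentence.lower().split())


def ordered_words(sentence):
    """Keep the words in the order they appear (hypothetical helper)."""
    return tuple(sentence.lower().split())


a = "the car ran over the cat"
b = "the cat ran over the car"

# Same words, same counts: the order-free view sees no difference.
same_bag = bag_of_words(a) == bag_of_words(b)
# Different sequences: the order-aware view separates the two sentences.
same_order = ordered_words(a) == ordered_words(b)

print(same_bag, same_order)  # prints: True False
```

This is why a model of sentence meaning has to combine words in context rather than simply add up their individual meanings.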

The study is an example of how the application of artificial neural networks, or AI, is enabling researchers to unlock the extremely complex signaling in the brain that underlies functions such as processing language.

The researchers gathered brain activity data from study participants who read sentences while undergoing fMRI. These scans showed activity spanning a network of different brain regions: the anterior and posterior temporal lobes, inferior parietal cortex, and inferior frontal cortex. Using the computational model InferSent, an AI model developed by Facebook and trained to produce unified semantic representations of sentences, the researchers could predict patterns of fMRI activity reflecting the encoding of sentence meaning across those brain regions.
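The general idea of predicting brain activity from sentence representations can be sketched as a simple "encoding model." The example below is an assumption-laden illustration, not the study's actual pipeline: it uses random vectors in place of InferSent embeddings, simulated numbers in place of fMRI data, and ridge regression as one common way to map one onto the other. All function and variable names are hypothetical.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def voxelwise_correlation(Y_true, Y_pred):
    """Pearson correlation between observed and predicted activity, per voxel."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.linalg.norm(yt, axis=0) * np.linalg.norm(yp, axis=0)
    return (yt * yp).sum(axis=0) / denom


rng = np.random.default_rng(0)
n_sentences, n_dims, n_voxels = 200, 16, 50

# Stand-ins for sentence embeddings (random, NOT real InferSent vectors).
embeddings = rng.standard_normal((n_sentences, n_dims))

# Simulated "fMRI" responses: a hidden linear mapping plus noise (NOT real data).
true_weights = rng.standard_normal((n_dims, n_voxels))
noise = 0.5 * rng.standard_normal((n_sentences, n_voxels))
activity = embeddings @ true_weights + noise

# Fit on the first 150 sentences, then predict the 50 held-out sentences.
train, test = slice(0, 150), slice(150, None)
weights = fit_ridge(embeddings[train], activity[train], alpha=1.0)
predicted = embeddings[test] @ weights

scores = voxelwise_correlation(activity[test], predicted)
print(f"mean held-out correlation: {scores.mean():.2f}")
```

In this kind of analysis, a high correlation on held-out sentences in a given region is taken as evidence that the region's activity carries information captured by the sentence representation. With real data the correlations would be far lower than in this clean simulation.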

“It’s the first time that we’ve applied this model to predict brain activity within these regions, and that provides new evidence that contextualized semantic representations are encoded throughout a distributed language network, rather than at a single site in the brain.”

Anderson and his team believe the findings could help researchers understand clinical conditions.

“We’re deploying similar methods to try to understand how language comprehension breaks down in early Alzheimer’s disease. We are also interested in moving the models forward to predict brain activity elicited as language is produced. The current study had people read sentences; in the future we’re interested in moving forward to predict brain activity as people might speak sentences.”

Additional coauthors are from the University of Rochester, Facebook AI Research, and the Medical College of Wisconsin. Support for the research came from the Del Monte Institute for Neuroscience’s Schmitt Program on Integrative Neuroscience and the Intelligence Advanced Research Projects Activity.

Source: University of Rochester