A new algorithm could let drones independently film spectacular action scenes.

Take, for example, the scene in Skyfall in which James Bond fights his adversary on the roof of a train as it races through the desert. The sequence includes a series of rapidly changing camera angles. Several camera operators worked for hours on end at a number of different locations, and a camera crane even had to be mounted on the train’s roof for the spectacular close-up shots.

Tobias Nägeli, a doctoral student at ETH Zurich in the Advanced Interactive Technologies Lab, is convinced that these scenes can be filmed with fewer resources. Together with researchers from Delft University of Technology and ETH spin-off Embotech, he has developed an algorithm that enables drones to film dynamic scenes independently in the way that directors and cinematographers intend.

Drones have been used in filming for a number of years, but good camera shots typically require two experienced experts—one to pilot the drone and one to control the camera angle. This is not only laborious but also expensive. It is true that commercial camera drones already exist that can follow a predefined person independently. “But this means that the director loses control over the shooting angle, as well as the option to keep several people in the image at once,” says Nägeli. “That’s why we’ve developed an intuitive control system.”

To explain how this works, Nägeli draws an analogy with robotic vacuum cleaners: “We don’t specify the exact path that the robot should take. We simply define the objective: that the room should be clean at the end of the process.” If we apply this analogy to film, it means that the director is not concerned with exactly where the drone is at a specific point in time. The most important thing is that the final shot meets their expectations.

Translating between cinematographer and drone is the job of Nägeli’s algorithm. Parameters such as the shooting angle, the person to follow, or tracking shots by the crane and camera can be defined before the flight. For safety purposes, these parameters are combined with spatial boundaries within which the drone can move freely. The drone recalculates the precise path, including the timing of changes of direction, 50 times per second, with GPS sensors providing the necessary data.
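The idea of setting a framing objective and spatial boundaries, then replanning 50 times per second, can be illustrated with a minimal sketch. This is not the published algorithm: it is a simplified two-dimensional stand-in that moves greedily toward the desired viewpoint, and every name in it (`replan_step`, `desired_camera_position`, the `bounds` and `max_speed` parameters) is hypothetical.

```python
import math

RATE_HZ = 50           # replanning rate quoted in the article
DT = 1.0 / RATE_HZ     # seconds between two replanning steps


def clamp(value, low, high):
    """Restrict value to the interval [low, high]."""
    return max(low, min(high, value))


def desired_camera_position(target, distance, angle_rad):
    """Viewpoint at `distance` from the target, at a given horizontal angle."""
    tx, ty = target
    return (tx + distance * math.cos(angle_rad),
            ty + distance * math.sin(angle_rad))


def replan_step(drone, target, distance, angle_rad, bounds, max_speed):
    """One replanning step (assumed greedy rule, not the authors' method).

    The goal is the desired viewpoint clamped to the allowed box. The drone
    moves toward it by at most max_speed * DT.
    """
    (xmin, xmax), (ymin, ymax) = bounds
    gx, gy = desired_camera_position(target, distance, angle_rad)
    gx, gy = clamp(gx, xmin, xmax), clamp(gy, ymin, ymax)

    dx, dy = gx - drone[0], gy - drone[1]
    gap = math.hypot(dx, dy)
    step = max_speed * DT
    if gap <= step:
        return (gx, gy)          # goal reachable within this step
    scale = step / gap
    return (drone[0] + dx * scale, drone[1] + dy * scale)
```

Calling `replan_step` in a loop with the latest position of the person being filmed gives a path that is never specified in advance. Only the framing (`distance`, `angle_rad`) and the box the drone may fly in are fixed beforehand, which mirrors the vacuum-cleaner analogy above.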

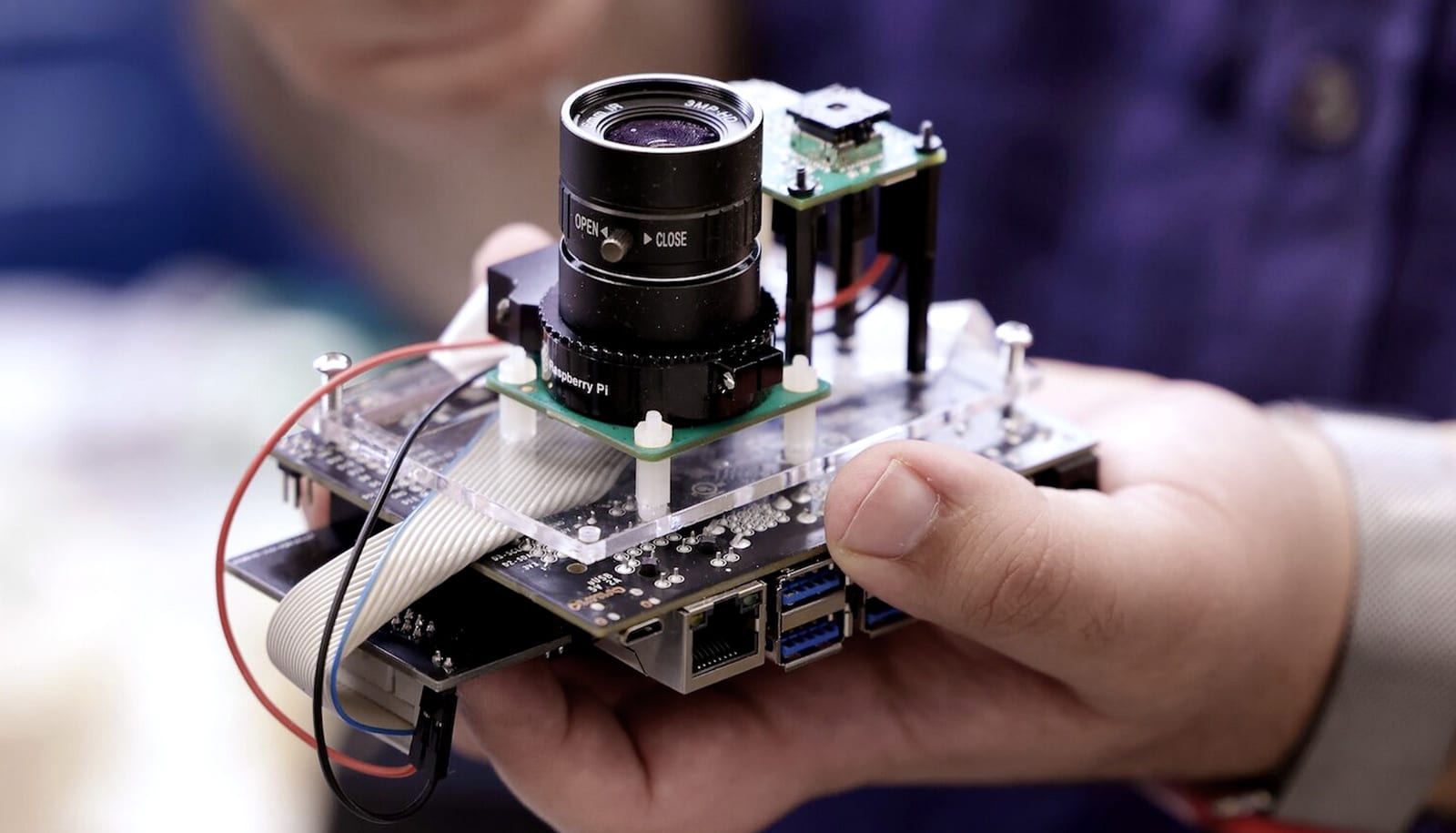

For the first proof of concept, Nägeli used a simple drone that is available to buy online for less than $500. The algorithm does not run on the drone itself, but rather on an external laptop that is connected to the drone by radio via a directional aerial. This allows flights with a range of up to one and a half kilometers. “That’s sufficient for most applications,” says Nägeli.

As reported in IEEE Robotics and Automation Letters, Nägeli demonstrated in collaboration with researchers from the Massachusetts Institute of Technology (MIT) that the drone can perform predefined shots independently, taking into account the image area and the position and angle of an actor within it.

The drone also identifies obstacles and avoids them automatically. For a second paper in ACM Transactions on Graphics, Nägeli commissioned filmmaker Christina Welter from Zurich University of the Arts (ZHdK) to outline a scene—with a predetermined plot—that would normally require multiple cameras and rails for tracking shots. Nägeli programmed the stage directions into two drones, which communicated between themselves.

In this way, he was able to film shots that are traditionally hard to implement, such as a tracking shot through an open window and filming with two cameras in a confined indoor space. Through appropriate programming, Nägeli was also able to prevent the drones from flying into each other’s shots.
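One way to picture the check that keeps a drone out of another drone's shot is a simple field-of-view test. The sketch below is an illustrative assumption, not the method from the paper: it works in two dimensions, treats the camera's view as a horizontal wedge, and uses a hypothetical function name, `is_in_shot`.

```python
import math


def is_in_shot(camera_pos, camera_heading_rad, fov_rad, other_pos):
    """Return True if other_pos lies inside the camera's horizontal view wedge.

    camera_heading_rad is the direction the camera points, and fov_rad is the
    full opening angle of the view. Range limits and occlusion are ignored.
    """
    dx = other_pos[0] - camera_pos[0]
    dy = other_pos[1] - camera_pos[1]
    if dx == 0 and dy == 0:
        return True  # same position: treat as visible
    bearing = math.atan2(dy, dx)
    # Wrap the angular difference into [-pi, pi) before comparing.
    diff = (bearing - camera_heading_rad + math.pi) % (2 * math.pi) - math.pi
    return abs(diff) <= fov_rad / 2
```

A planner could run such a test for each pair of drones and reject candidate positions for which it returns `True`.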

The ZHdK cinematographers were skeptical about Nägeli’s innovation. After all, the art of good picture composition is a hard-learned craft. “However, we do not want to replace the director or the cinematographers,” Nägeli explains. “Rather, our system is intended to expand the range of tools available to filmmakers and allow them to take shots that were previously impossible or extremely laborious.”

Ski slopes and factories

Nägeli thinks that the algorithms could see their first application not in a film studio but in television sports broadcasting; for example, ski races. “This is an area with a huge demand for dynamic shots,” says Nägeli. “But manually piloted film drones can present a hazard for the athletes, as we’ve seen from drone crashes in the past.”

That is why “spidercams” are typically used today; for example, at the World Ski Championships in St. Moritz. There, the camera travels along a cable suspended above the athlete; however, this is not without risk either, as demonstrated when a plane collided with the installation in St. Moritz in February. “We are actually doing the same thing as spidercams, but we do it virtually and without cables,” says Nägeli. “We can create a virtual flight path that prevents the drone from coming within a minimum safety distance of the athlete.”
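The minimum safety distance Nägeli describes can be sketched as a keep-out sphere around the athlete. The following is a minimal illustration under that assumption. The function name `enforce_safety_distance` and the choice to push a waypoint radially outward are hypothetical and not taken from the researchers' work.

```python
import math


def enforce_safety_distance(waypoint, athlete, min_distance):
    """Keep a 3D waypoint at least min_distance away from the athlete.

    Waypoints that are already far enough away are returned unchanged.
    Waypoints that are too close are pushed radially outward onto the
    surface of the keep-out sphere.
    """
    offset = [w - a for w, a in zip(waypoint, athlete)]
    dist = math.sqrt(sum(c * c for c in offset))
    if dist >= min_distance:
        return tuple(waypoint)
    if dist == 0.0:
        # No direction to push along: move straight up (arbitrary choice).
        return (athlete[0], athlete[1], athlete[2] + min_distance)
    scale = min_distance / dist
    return tuple(a + c * scale for a, c in zip(athlete, offset))
```

Applying this to every point of a planned path gives a virtual flight path that never enters the sphere, without any physical cable.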

The algorithms could also be used for inspection of industrial facilities; for example, in the case of wind turbines that are examined for defects using drones. Or for transport purposes: it would be possible to define air corridors that could be used to transport blood or donor organs safely in an emergency. “The drone could identify the fastest and safest flight path independently within this corridor.”

50 drones at a time

Back in the world of film, however, how will this technology change the industry in the longer term? Will scenes lasting several minutes soon be filmed exclusively with drones? “I see no reason why not,” says Nägeli. “It’s already possible to synchronize 50 drones at once. Using our algorithm, they can all be programmed to shoot precisely the images that the director wants.”

Would it therefore be possible to film, for example, the heroic battle on the moving train in Skyfall without mounted camera systems and without an army of camera operators? “At present, the algorithm is still in its infancy,” explains Nägeli. “But with appropriate investment in the technology and a dedicated team, we could reach that stage in one or two years.”

Nägeli is currently thinking of founding a spin-off to market the technology after he finishes his doctoral thesis, and ETH Zurich has already applied to patent the algorithm. He is convinced that it could be of interest to media and film productions, as illustrated by a research project in collaboration with Deutsche Welle and Italian broadcaster RAI, which the EU funds as part of Horizon 2020.

Source: Samuel Schlaefli for ETH Zurich