A new camera system doesn’t need a long lens to take a detailed micron-resolution image of a faraway object.

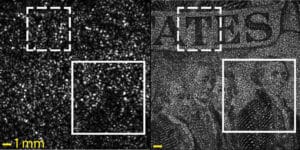

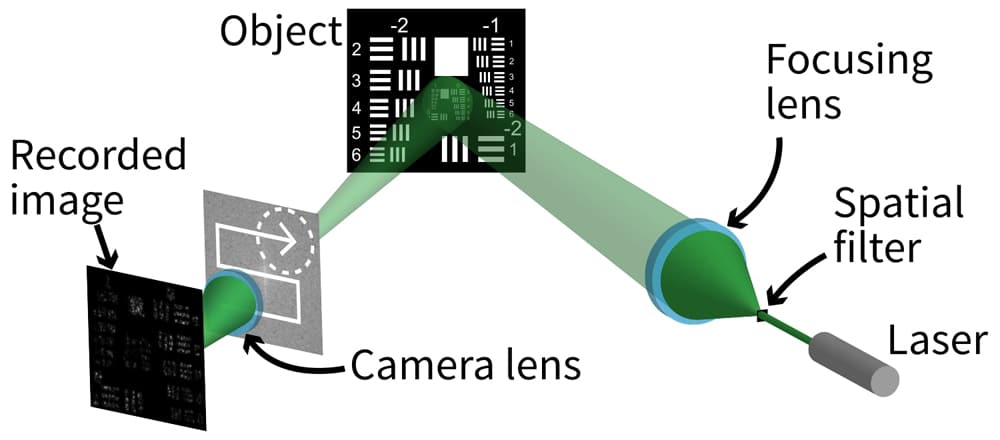

The prototype images a spot illuminated by a laser and records the resulting “speckle” pattern with a camera sensor. Raw data from dozens of camera positions feeds into a computer program that processes it and reconstructs a high-resolution image.

The system known as SAVI—for “Synthetic Apertures for long-range, subdiffraction-limited Visible Imaging”—only works with coherent illumination sources such as lasers, but it’s a step toward a SAVI camera array for use in visible light, says Ashok Veeraraghavan, assistant professor of electrical and computer engineering at Rice University.

“Today, the technology can be applied only to coherent (laser) light,” he says. “That means you cannot apply these techniques to take pictures outdoors and improve resolution for sunlit images—as yet. Our hope is that one day, maybe a decade from now, we will have that ability.”

The technology is the subject of a paper in Science Advances.

Speckles and lasers

Labs led by Veeraraghavan at Rice and Oliver Cossairt at Northwestern University’s McCormick School of Engineering built and tested the device that compares interference patterns between multiple speckled images. Like the technique used to achieve The Matrix’s “bullet time” special effect, the images are taken from slightly different angles, but with one camera that moves between shots instead of many fired in sequence.

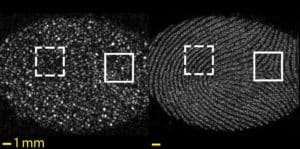

Veeraraghavan explains that the speckles serve as reference beams, essentially replacing one of the two beams used to create holograms. When a laser illuminates a rough surface, the viewer sees grain-like speckles in the illuminated spot. That’s because light scattered from different points on the surface travels different distances on its way back, so the returning waves arrive out of phase and interfere, brightening some points and darkening others. The texture of a piece of paper, or even a fingerprint, is enough to cause the effect.
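The mechanism described above can be reproduced with the textbook random-phasor model of speckle: each point on a rough surface returns a wave whose phase depends on its extra path length, and a camera pixel records only the intensity of their sum. The sketch below is a generic illustration, not code from the SAVI paper; the function names and the numbers of scatterers and pixels are arbitrary assumptions.

```python
import cmath
import math
import random
import statistics


def speckle_intensity(num_scatterers: int, rng: random.Random) -> float:
    """Intensity at one pixel from a rough surface.

    Each scatterer contributes a unit-amplitude wave whose phase, set by its
    random extra path length, is uniform on [0, 2*pi).
    """
    field = sum(
        cmath.exp(1j * rng.uniform(0.0, 2.0 * math.pi))
        for _ in range(num_scatterers)
    )
    return abs(field) ** 2  # the sensor records intensity; phase is lost


def speckle_contrast(num_pixels: int = 10000, num_scatterers: int = 40, seed: int = 0) -> float:
    """Speckle contrast: standard deviation of pixel intensity divided by its mean."""
    rng = random.Random(seed)
    samples = [speckle_intensity(num_scatterers, rng) for _ in range(num_pixels)]
    return statistics.pstdev(samples) / statistics.fmean(samples)


contrast = speckle_contrast()
print(f"speckle contrast: {contrast:.2f}")
```

For fully developed speckle the contrast approaches 1, so the printed value should be close to 1. If every phase were equal, as for a smooth mirror, every pixel would see the same intensity and the contrast would drop to 0.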

The researchers use these phase irregularities to their advantage.


“The problem we’re solving is that no matter what wavelength of light you use, the resolution of the image—the smallest feature you can resolve in a scene—depends upon this fundamental quantity called the diffraction limit, which scales linearly with the size of your aperture,” Veeraraghavan says.

“With a traditional camera, the larger the physical size of the aperture, the better the resolution,” he says. “If you want an aperture that’s half a foot, you may need 30 glass surfaces to remove aberrations and create a focused spot. This makes your lens very big and bulky.”
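The aperture dependence in these quotes can be made concrete with the standard Rayleigh criterion for a circular aperture of diameter D at wavelength λ and distance z: the smallest resolvable separation is about 1.22 λ z / D. The resolvable feature size therefore shrinks in inverse proportion to D; equivalently, resolving power grows linearly with it. The wavelength, distance, and apertures below are illustrative assumptions, not values from the SAVI paper.

```python
def rayleigh_resolution(wavelength_m: float, distance_m: float, aperture_m: float) -> float:
    """Smallest resolvable separation (meters) at distance_m for a circular
    aperture of diameter aperture_m, using the Rayleigh criterion 1.22*λ*z/D."""
    return 1.22 * wavelength_m * distance_m / aperture_m


# Illustrative values: a 633 nm red laser and a target 1 m away.
WAVELENGTH = 633e-9
DISTANCE = 1.0

for aperture in (2.5e-3, 25e-3):  # a small 2.5 mm lens vs. a 10x wider 25 mm aperture
    spot_um = rayleigh_resolution(WAVELENGTH, DISTANCE, aperture) * 1e6
    print(f"D = {aperture * 1e3:.1f} mm -> smallest feature ~ {spot_um:.1f} um")
```

With these numbers the 2.5 mm lens resolves features of roughly 309 µm, while the tenfold wider aperture resolves roughly 31 µm. This is why an aperture that behaves like a much larger lens, physical or synthetic, sharpens the image.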

SAVI’s “synthetic aperture” sidesteps the problem by replacing a long lens with a computer program that resolves the speckle data into an image. “You can capture interference patterns from a fair distance,” Veeraraghavan says. “How far depends on how strong the laser is and how far away you can illuminate.”

“By moving aberration estimation and correction out to computation, we can create a compact device that gives us the same surface area as the lens we want without the size, weight, volume, and cost,” says Cossairt, an assistant professor of electrical engineering and computer science at Northwestern.
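How large the synthetic aperture becomes depends on how far the camera travels. The sketch below assumes, for illustration only, a square grid of camera positions in which neighboring captures overlap by a fixed fraction of the physical lens diameter, since Fourier-ptychography-style reconstructions generally need overlap between adjacent views. The function names, overlap fraction, and sizes are hypothetical, not the SAVI prototype's parameters.

```python
import math


def positions_per_axis(lens_mm: float, target_mm: float, overlap: float) -> int:
    """Camera positions needed along one axis so the union of lens apertures
    spans target_mm, with neighbors overlapping by `overlap` (a fraction in
    [0, 1)) of the lens diameter. Hypothetical helper for illustration."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    if target_mm <= lens_mm:
        return 1  # a single capture already covers the target aperture
    step_mm = lens_mm * (1.0 - overlap)
    # The small epsilon keeps floating-point noise (e.g. 8.0000000001) from rounding up.
    return math.ceil((target_mm - lens_mm) / step_mm - 1e-9) + 1


def synthetic_aperture_mm(lens_mm: float, overlap: float, n: int) -> float:
    """Width spanned by n overlapping lens positions along one axis."""
    return lens_mm + (n - 1) * lens_mm * (1.0 - overlap)


# Hypothetical example: a 2.5 mm lens, 50% overlap, aiming for a 12.5 mm synthetic aperture.
n = positions_per_axis(2.5, 12.5, 0.5)
span = synthetic_aperture_mm(2.5, 0.5, n)
print(f"{n} positions per axis, {n * n} captures, synthetic aperture {span:.1f} mm, "
      f"{span / 2.5:.0f}x the lens diameter")
```

This prints `9 positions per axis, 81 captures, synthetic aperture 12.5 mm, 5x the lens diameter`. Because diffraction-limited resolution improves in proportion to aperture width, a fivefold wider synthetic aperture corresponds to roughly fivefold finer detail, provided the reconstruction succeeds.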

Cheaper than lenses?

Lead author Jason Holloway, a Rice alumnus who is now a postdoctoral researcher at Columbia University, suggests an array of inexpensive sensors and plastic lenses that cost a few dollars each may someday replace traditional telephoto lenses that cost more than $100,000. “We should be able to capture that exact same performance but at orders-of-magnitude lower cost,” he says.

Such an array would eliminate the need for a moving camera and capture all the data at once, “or as close to that as possible,” Cossairt says. “We want to push this to where we can do things dynamically. That’s what is really unique: There’s an avenue toward real-time, high-resolution capture using this synthetic aperture approach.”

Veeraraghavan says SAVI leans on work from Caltech and the University of California, Berkeley, which developed the Fourier ptychography technique that allows microscopes to resolve images beyond the physical limitations of their optics.
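Fourier ptychography treats each capture as an intensity-only image formed from one window of the object's spatial-frequency spectrum. Shifting the aperture (in a SAVI-style setup, by moving the camera between shots) selects a different, overlapping window, and a phase-retrieval algorithm then stitches the windows into a wider synthetic spectrum. The 1D toy below sketches only that forward model with a naive DFT in plain Python. The function names, window sizes, and test field are hypothetical, the spectrum is indexed in plain DFT order for simplicity, and the phase-retrieval step that inverts the model is omitted.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform (O(n^2); fine for a toy example)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spec):
    """Inverse of dft(), including the 1/n normalization."""
    n = len(spec)
    return [sum(spec[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def capture(spectrum, start, width):
    """One low-resolution capture: the aperture passes only `width` consecutive
    spectral samples beginning at `start`, and the sensor keeps intensity only."""
    window = spectrum[start:start + width]
    return [abs(v) ** 2 for v in idft(window)]


def simulate_captures(obj, width, step):
    """Slide the aperture across the spectrum in increments of `step`.
    Choosing step < width gives overlapping windows, which phase retrieval relies on."""
    spectrum = dft(obj)
    starts = range(0, len(spectrum) - width + 1, step)
    return [capture(spectrum, s, width) for s in starts]


# Hypothetical 16-sample complex field with a spread-out spectrum.
obj = [cmath.exp(1j * 0.3 * t * t) for t in range(16)]
captures = simulate_captures(obj, width=8, step=4)
print(len(captures), [len(c) for c in captures])
```

This prints `3 [8, 8, 8]`: three half-resolution captures whose overlapping windows together cover the full 16-sample spectrum, which is what lets a reconstruction recover detail that no single capture contains.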

The SAVI team’s breakthrough was the discovery that it could put the light source on the same side as the camera rather than behind the target, as in transmission microscopy, Cossairt says.

“We started by making a larger version of their microscope, but SAVI has additional technical challenges. Solving those is what this paper is about,” Veeraraghavan says.

The National Science Foundation, the Office of Naval Research, and a Northwestern University McCormick Catalyst grant supported the research.

Source: Rice University